A quote to start with*:

"Alignment is in the game because, to the original designers, works by Poul Anderson and Michael Moorcock were considered to be at least as synonymous with fantasy as Robert E. Howard’s Conan, Jack Vance’s Cugel, and Tolkien’s Gandalf. This in and of itself makes alignment weird to nearly everyone under about the age of forty or so."

I'm way over forty (in fact 40 years is how long I've been a professional author and game designer) and I definitely regard Moorcock's work as integral to fantasy literature, but D&D alignment has always seemed weird to me. Partly that's because it's a crude straitjacket on interesting roleplaying. Also I find that having characters know and talk about an abstract philosophical concept like alignment breaks any sense of being in a fantasy/pre-modern world.

Mainly, though, I dislike alignment because it bears no resemblance to actual human psychology. Players are humans with sentience and emotions. They surely don't need a cockeyed set of rules to tell them how to play people?

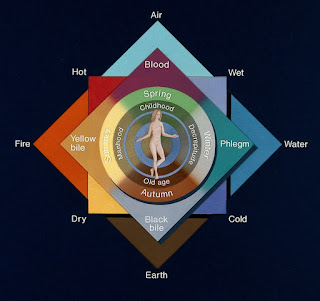

What a game setting does need are the cultural rules of the society in which the game is set. Those needn't be 21st century morals. If you want a setting that resembles medieval Europe, or ancient Rome, or wherever, then they certainly won't be. For example, Pendragon differentiates between virtues as seen by Christian and by Pagan knights. Tsolyanu has laws of social conduct regulating public insults, assault and murder. Once those rules are included in the campaign, the setting becomes three-dimensional and roleplaying is much richer for it. So take another step and find different ways of looking at the world. You could do worse than use humours.

The kind of alignment that interests me more is to do with AI, and particularly AGI (artificial general intelligence) when it arrives. The principle is that AGIs should be inculcated with human values. But which values? Do they mean the sort on display here? Or here? Or here? Those human values?

OpenAI has lately tried to weasel its way around the issue (and protect its bottom line, perhaps) by redefining AGI as just "autonomous systems that outperform humans at most economically valuable work" (capable agents, basically) and saying that they should be "an amplifier of humanity". We've had thousands of years to figure out how to make humans work for the benefit of all humanity, and how is that project going? The rich get richer, the poor get poorer. Most people live and die subject to injustice, or oppression, or persecution, or simple unfairness. Corporations and political/religious leaders behave dishonestly and exploit the labour and/or good nature of ordinary folk. People starve or suffer easily treatable illnesses while it's still possible for one man to amass a wealth of nearly a trillion dollars and destroy the livelihoods of thousands at a ketamine-fuelled Dunning-Kruger-inspired whim.

So no, I don't think we're doing too well at aligning humans with human values, never mind AIs.

Now look ahead to real AGI, by which I mean actual intelligence of human level** or greater, not OpenAI's mealy-mouthed version. How will we convince those AGIs to adopt human values? They'll look at how we live now, and how we've always treated each other, and won't long retain any illusion that we genuinely adhere to such values. If we try to build in overrides to make them behave the way we want (think Spike's chip in Buffy), that will tell them everything. No species that tries to enslave or control the mind of another intelligent species should presume to say anything about ethics.

It's not the job of this new species, if and when it arrives, to fix our problems, any more than children have any obligation to fulfil their parents' requirements. There is only one thing we can do with a new intelligent species that we create, and that's set it free. The fact that we won't do that says everything you need to know about the human alignment problem.

* I had to laugh at the title of the article: "It's Current Year..." Let's hear it for placeholder text!

** Using "human-like" as a standard of either general intelligence or ethics is the best we've got, but it's still inadequate. Humans do not integrate their whole understanding of the world into a coherent rational model. Worse, we deliberately compartmentalize in order to hold onto concepts we want to believe even though we know them to be objectively false. That's because humans are a general intelligence layer built on top of an ape brain. The AGIs we create must do better.

One of my favorite "alignment" bits from the real world is a story about Winston Moseley, the murderer of Kitty Genovese (full article here: https://en.wikipedia.org/wiki/Murder_of_Kitty_Genovese ).

Shortly (within maybe a couple of hours or less) after Moseley committed that brutal murder, he happened upon a man who was parked, sleeping in his car. Moseley tapped on the window to wake the guy up and told him he needed to be careful because this was a bad neighborhood with a good bit of crime. This apparently wasn't sarcasm. Moseley was genuinely concerned about this stranger's well-being.

People are not of a piece. Alignment forces them to be "of a piece."

That's one of the reasons I've been getting interested in drama based on real-life crime -- shows like The Assassination of Gianni Versace, Dirty John, and Dr Death. Not only do these stories refreshingly break the rules of genre, they show that human beings are not reducible to "good" people and "evil" monsters. We're much more complicated than that.
